Steps to reproduce

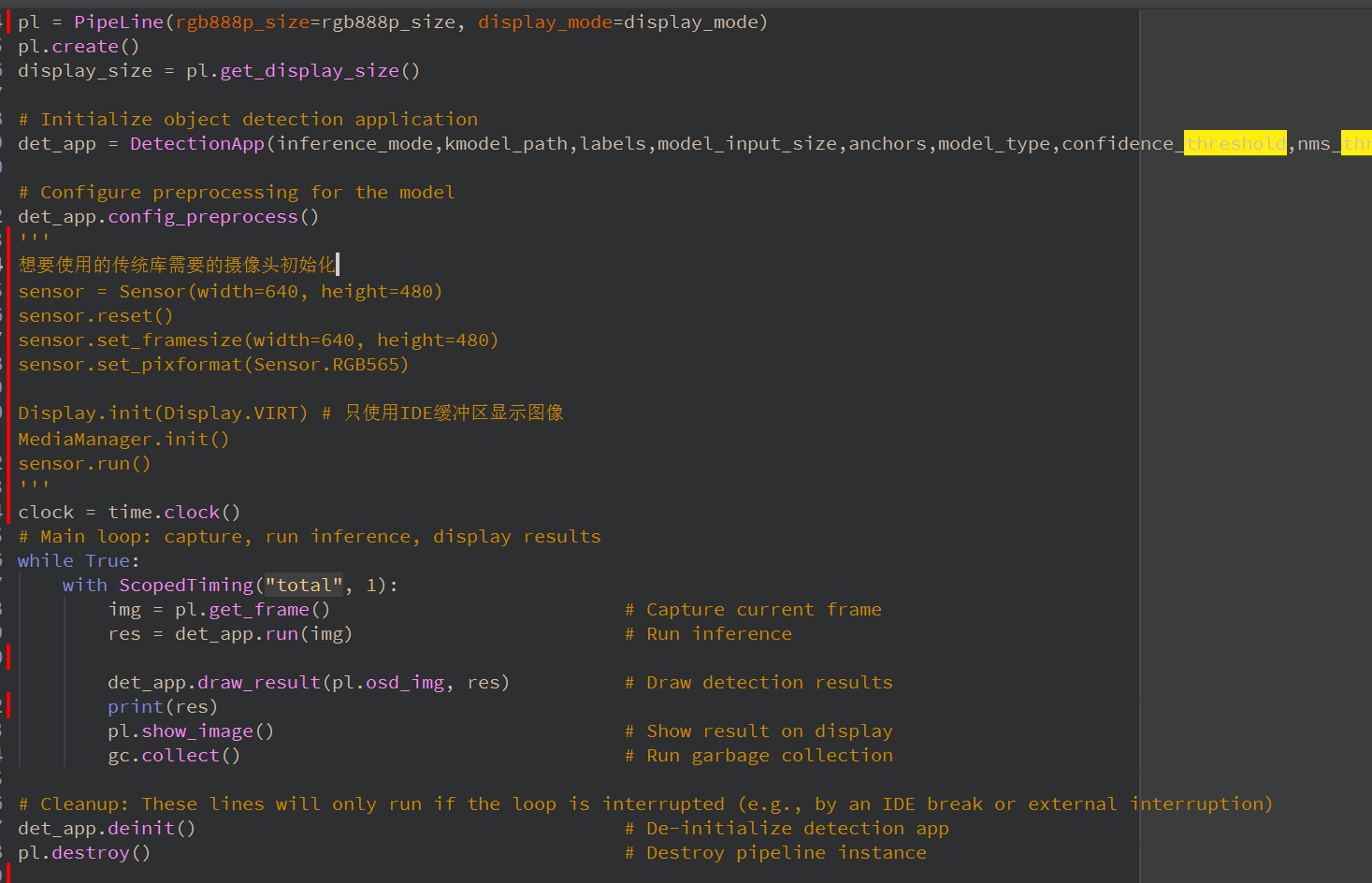

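I want to process frames with the traditional image-processing library, so I tried to initialize the camera. The problem is that the original program has already initialized it, and the image object obtained from that initialization does not have the methods I need. Simply adding a second initialization raises an error saying the camera has already been initialized.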

Expected and actual results

Expected: the camera initializes normally. Actual: an error is raised.

Lushan Pi (庐山派) K230

Software and hardware version information

Error log

Attempted solutions

Additional material

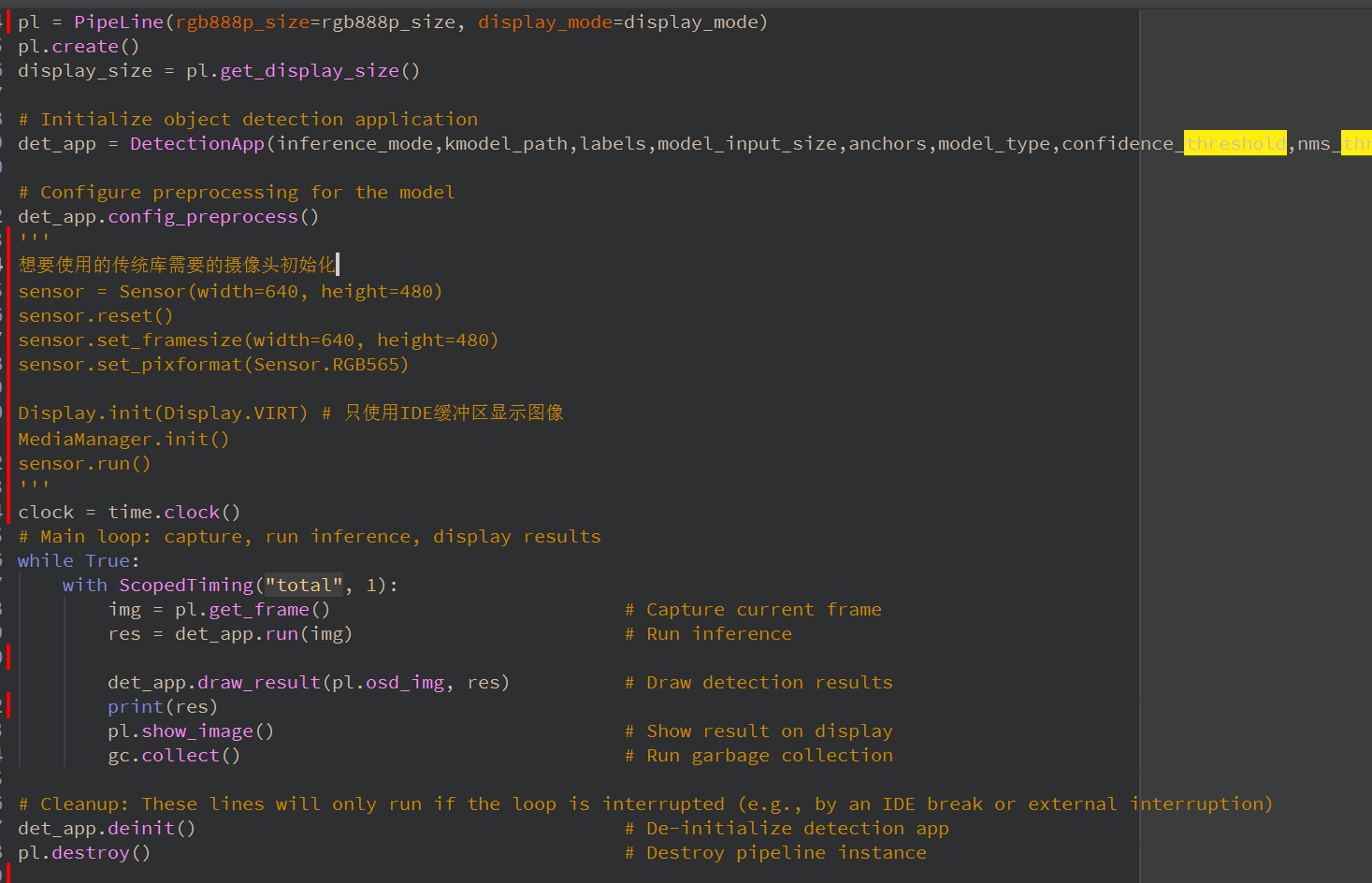

Refer to the code below:

import os, sys, gc, time, ujson
from media.sensor import *
from media.display import *
from media.media import *
from libs.PlatTasks import DetectionApp
from libs.Utils import *
import nncase_runtime as nn
import ulab.numpy as np
import image

display_size = [800, 480]
rgb565_size = [640, 480]
rgb888p_size = [640, 360]

# Initialize the sensor once; all three output channels below share this instance
sensor = Sensor()
sensor.reset()
#sensor.set_hmirror(False)
#sensor.set_vflip(False)

Display.init(Display.ST7701, width=display_size[0], height=display_size[1], osd_num=1, to_ide=True)

# Channel 0 goes straight to the display (VO), format YUV420
sensor.set_framesize(width=display_size[0], height=display_size[1], chn=CAM_CHN_ID_0)
sensor.set_pixformat(Sensor.YUV420SP, chn=CAM_CHN_ID_0)

# Channel 1 is for OpenMV-style image processing, format RGB565
sensor.set_framesize(width=rgb565_size[0], height=rgb565_size[1], chn=CAM_CHN_ID_1)
sensor.set_pixformat(Sensor.RGB565, chn=CAM_CHN_ID_1)

# Channel 2 is for the AI algorithm, format RGB888 (RGBP888)
sensor.set_framesize(width=rgb888p_size[0], height=rgb888p_size[1], chn=CAM_CHN_ID_2)
sensor.set_pixformat(Sensor.RGBP888, chn=CAM_CHN_ID_2)

# Initialize the OSD image
osd_img = image.Image(display_size[0], display_size[1], image.ARGB8888)

# Bind channel 0 to the video layer of the display
sensor_bind_info = sensor.bind_info(x=0, y=0, chn=CAM_CHN_ID_0)
Display.bind_layer(**sensor_bind_info, layer=Display.LAYER_VIDEO1)

# Initialize the media manager
MediaManager.init()

# Start the sensor
sensor.run()

# Set root directory path for model and config
root_path = "/sdcard/mp_deployment_source/"

# Load deployment configuration (root_path already ends with "/")
deploy_conf = read_json(root_path + "deploy_config.json")
kmodel_path = root_path + deploy_conf["kmodel_path"]  # KModel path
labels = deploy_conf["categories"]  # Label list
confidence_threshold = deploy_conf["confidence_threshold"]  # Confidence threshold
nms_threshold = deploy_conf["nms_threshold"]  # NMS threshold
model_input_size = deploy_conf["img_size"]  # Model input size
nms_option = deploy_conf["nms_option"]  # NMS strategy
model_type = deploy_conf["model_type"]  # Detection model type

# Anchors are only needed for anchor-based detection models
anchors = []
if model_type == "AnchorBaseDet":
    anchors = deploy_conf["anchors"][0] + deploy_conf["anchors"][1] + deploy_conf["anchors"][2]

# Inference configuration
inference_mode = "video"  # Inference mode: 'video'
debug_mode = 0  # Debug mode flag

# Initialize object detection application
det_app = DetectionApp(inference_mode, kmodel_path, labels, model_input_size, anchors, model_type, confidence_threshold, nms_threshold, rgb888p_size, display_size, debug_mode=debug_mode)

# Configure preprocessing for the model
det_app.config_preprocess()

# Main loop: capture, run inference, display results
try:
    while True:
        with ScopedTiming("total", 1):
            # Grab one RGB565 frame here; it can be used with the OpenMV-style image methods
            img_rgb565 = sensor.snapshot(chn=CAM_CHN_ID_1)

            # Grab one RGB888P frame here for the AI model
            img_rgb888p = sensor.snapshot(chn=CAM_CHN_ID_2)  # Capture current frame
            img_np = img_rgb888p.to_numpy_ref()
            res = det_app.run(img_np)  # Run inference
            det_app.draw_result(osd_img, res)  # Draw detection results
            Display.show_image(osd_img, 0, 0, Display.LAYER_OSD0)

            gc.collect()  # Run garbage collection
finally:
    # Cleanup: runs when the loop is interrupted (e.g., by an IDE break or external interruption)
    det_app.deinit()  # De-initialize detection app
    sensor.stop()
    # Deinit LCD
    Display.deinit()
    time.sleep_ms(50)
    # Deinit media buffer
    MediaManager.deinit()
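
The idea behind the code above (initialize the sensor once, then give each consumer its own output channel) can also be tried off-device. The following is only a rough sketch in plain Python: `MockSensor`, `set_channel` and `build_shared_sensor` are hypothetical stand-ins invented for illustration and are not part of the real `media.sensor` API. It just models why a second initialization fails and how one instance can serve several channels.

```python
# Off-device illustration only. MockSensor is a hypothetical stand-in,
# NOT the real media.sensor.Sensor class.

class MockSensor:
    _initialized = False  # models the camera hardware being claimed once

    def __init__(self):
        if MockSensor._initialized:
            raise RuntimeError("sensor already initialized")
        MockSensor._initialized = True
        self._channels = {}

    def set_channel(self, chn, width, height, fmt):
        # Each channel gets its own frame size and pixel format.
        self._channels[chn] = {"width": width, "height": height, "fmt": fmt}

    def snapshot(self, chn):
        # Return a description of the frame this channel would deliver.
        return dict(self._channels[chn])


def build_shared_sensor():
    # One instance, three channels, mirroring the sizes used in the reply.
    sensor = MockSensor()
    sensor.set_channel(0, 800, 480, "YUV420SP")  # display
    sensor.set_channel(1, 640, 480, "RGB565")    # OpenMV-style processing
    sensor.set_channel(2, 640, 360, "RGBP888")   # AI inference
    return sensor


sensor = build_shared_sensor()
frame_for_openmv = sensor.snapshot(chn=1)
frame_for_ai = sensor.snapshot(chn=2)

try:
    MockSensor()  # a second initialization fails, as described in the report
    second_init_failed = False
except RuntimeError:
    second_init_failed = True

print(frame_for_openmv["fmt"], frame_for_ai["fmt"], second_init_failed)
```

On the board, the same role is played by the single `sensor` object in the reply: take the RGB565 frame from channel 1 for traditional processing instead of creating a second `Sensor`.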