Problem description

Running the script fails with the following error:

Traceback (most recent call last):

File "", line 188, in

File "/sdcard/libs/PipeLine.py", line 142, in show_image

File "media/display.py", line 811, in show_image

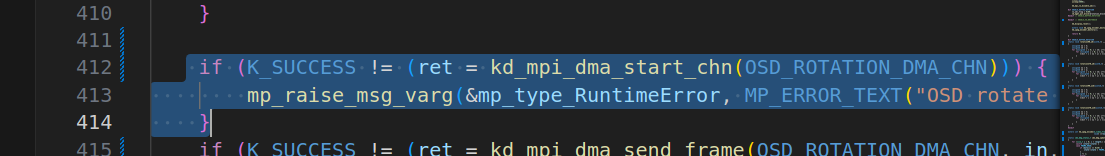

RuntimeError: OSD rotate error 3, -1

The error can be traced to ide_dbg.c.

The serial terminal output is below:

[tuning] dev: 0

acq_win.width: 1920

acq_win.height: 1080

pipe_ctrl: 4261412857

sensor_fd: 10

sensor_type: 9

sensor_name: gc2093_csi2

database_name: gc2093-1920x1080

buffer_num: 0

buffer_size: 0

[tuning] chn: 0

out_win.width: 960

out_win.height: 536

bit_width: 0

pix_format: 5

buffer_num: 6

buffer_size: 774144

yraw_size: 0

uv_size: 0

v_size: 0

block_type: 1

wait_time: 500

chn_enable: 1

isp_3dnr_en is 1 g_isp_dev_ctx[dev_num].dev_attr.pipe_ctrl.bits.dnr3_enable is 0

VsiCamDeviceCreate hw:0-vt:0 created!

kd_mpi_isp_set_output_chn_format, width(960), height(536), pix_format(5)

kd_mpi_isp_set_output_chn_format, width(320), height(320), pix_format(16)

[dw] init, version Apr 23 2025 00:06:17

=== OSD rotation debug ===

Rotation flag: 2

Input size: 960x536

Output size: 536x960

Input stride: 3840

Output stride: 2144

90/270-degree rotation: original height=536, rotated width=536

Rotated width aligned to 8: yes

<3>[20] [Func]:gdma_vb_source_init [Line]:348 [Info]:no blk

kd_mpi_isp_stop_stream chn enable is 1

kd_mpi_isp_stop_stream chn enable is 0

kd_mpi_isp_stop_stream chn enable is 1

release reserved vb 549309440

release reserved vb 559648768

ch 3: 131 pictures encoded. Average FrameRate = 37 Fps

ch 3: total used pages 549

[mpy] exit, reset

did't init dma_dev

<3>[4] [Func]:vb_do_exit [Line]:1569 [Info]:vb already exited!

[mpy] enter script

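Running cat /proc/umap/dma in the terminal gives the same output as the example in the documentation.

Steps to reproduce

Using an NT35516 screen, run the flashed AI kmodel demo: capture video from the camera (gc2093), display it on the IDE and the LCD at the same time, and run AI inference.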
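The numbers in the rotation debug output can be cross-checked with a little arithmetic. The sketch below assumes the OSD layer is ARGB8888 (4 bytes per pixel, as in the `image.Image(..., image.ARGB8888)` call in PipeLine.py) and that the video channel is YUV420 semi-planar (1.5 bytes per pixel). The 4096-byte rounding is only an observation that happens to reproduce the logged `buffer_size`, not confirmed driver behavior, and both helper names are hypothetical.

```python
def rotation_geometry(width, height, bytes_per_pixel=4):
    """Strides and buffer size for rotating a packed image by 90 or 270 degrees."""
    in_stride = width * bytes_per_pixel
    out_width, out_height = height, width  # a quarter turn swaps the dimensions
    out_stride = out_width * bytes_per_pixel
    return {
        "in_stride": in_stride,
        "out_size": (out_width, out_height),
        "out_stride": out_stride,
        "buffer_bytes": in_stride * height,  # same byte count before and after rotation
    }


def yuv420_bytes(width, height, page=4096):
    """YUV420 frame size rounded up to a multiple of `page` bytes (assumed rounding)."""
    raw = width * height * 3 // 2
    return (raw + page - 1) // page * page


osd = rotation_geometry(960, 536)
print(osd["in_stride"], osd["out_stride"], osd["buffer_bytes"])
print(yuv420_bytes(960, 536))
```

For the 960x536 OSD this gives strides of 3840 and 2144 bytes, matching the log, and 2,058,240 bytes (about 2 MB) for each rotated output buffer. Since the `gdma_vb_source_init` line reports `no blk`, one thing that may be worth checking is whether the buffer pool used for rotation has a free block of at least that size; that reading of the message is a guess, not something the log confirms.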

Hardware board

RT-Smart AI learning kit

Software version

k230_canmv_dongshanpi_sdcard__nncase_v2.9.0

Additional information

main.py

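'''
This program is licensed under GPL V3; please comply with the license.
Experiment platform: DshanPI CanMV
Board documentation site: https://eai.100ask.net/
百问网 (100ask) learning platform: https://www.100ask.net
百问网 (100ask) official Bilibili channel: https://space.bilibili.com/275908810
百问网 (100ask) official Taobao store: https://100ask.taobao.com
'''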

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

import ujson

from media.media import *

from media.display import *

from time import *

import nncase_runtime as nn

import ulab.numpy as np

import time

import utime

import image

import random

import gc

import sys

import aidemo

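# ===================== 2. Core peripheral configuration (only what is needed, kept tidy) =====================
DISPLAY_WIDTH = 960   # native physical width of the LCD
DISPLAY_HEIGHT = 540  # native physical height of the LCD

# Alignment helpers (kept as needed to meet Camera requirements); both round down
def align_to_8(x):
    return (x // 8) * 8

def align_to_16(x):
    return (x // 16) * 16

# Sensor output size (after alignment; replaces the original 1280x720)
SENSOR_WIDTH = DISPLAY_WIDTH
SENSOR_HEIGHT = 536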

# Custom face detection class, derived from the AIBase base class
class FaceDetectionApp(AIBase):
    def __init__(self, kmodel_path, anchors, model_input_size=[640,640], confidence_threshold=0.5, nms_threshold=0.2, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):
        super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)  # call the base-class constructor
        self.kmodel_path = kmodel_path  # path to the model file
        self.model_input_size = model_input_size  # model input resolution
        self.confidence_threshold = confidence_threshold  # confidence threshold
        self.nms_threshold = nms_threshold  # NMS (non-maximum suppression) threshold
        self.anchors = anchors  # anchor data used for detection
        self.rgb888p_size = [align_to_16(rgb888p_size[0]), rgb888p_size[1]]  # resolution of the image the sensor passes to the AI; width aligned to 16
        self.display_size = [align_to_16(display_size[0]), display_size[1]]  # display resolution; width aligned to 16
        self.debug_mode = debug_mode  # whether debug mode is enabled
        self.ai2d = Ai2d(debug_mode)  # Ai2d instance used for model preprocessing
        self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)  # set the Ai2d input/output format and dtype
        # ========== Print disabled: sizes at initialization ==========
        # print(f"[init] rgb888p_size: {self.rgb888p_size}, width a multiple of 8 after aligning to 16? {self.rgb888p_size[0]%8==0}")
        # print(f"[init] display_size: {self.display_size}, width a multiple of 8 after aligning to 16? {self.display_size[0]%8==0}")

    # Configure preprocessing. Pad and resize are used here; Ai2d supports crop/shift/pad/resize/affine. See /sdcard/app/libs/AI2D.py for the implementation
    def config_preprocess(self, input_image_size=None):
        with ScopedTiming("set preprocess config", self.debug_mode > 0):  # timer, enabled when debug_mode is greater than 0
            # Input size for the ai2d preprocessing; defaults to the size the sensor passes to the AI and can be overridden with input_image_size
            ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size
            # ========== Print disabled: AI2D input size ==========
            # print(f"[AI2D] input size: {ai2d_input_size}, width a multiple of 8? {ai2d_input_size[0]%8==0}")
            top, bottom, left, right = self.get_padding_param()  # get the padding parameters
            self.ai2d.pad([0, 0, 0, 0, top, bottom, left, right], 0, [104, 117, 123])  # pad the borders
            self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)  # resize the image
            self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])  # build the preprocessing pipeline

    # Task-specific postprocessing; results is the list of model output arrays. Uses the face_det_post_process interface from the aidemo library
    def postprocess(self, results):
        with ScopedTiming("postprocess", self.debug_mode > 0):
            post_ret = aidemo.face_det_post_process(self.confidence_threshold, self.nms_threshold, self.model_input_size[1], self.anchors, self.rgb888p_size, results)
            if len(post_ret) == 0:
                return post_ret
            else:
                return post_ret[0]

    # Draw the detection results onto the frame
    def draw_result(self, pl, dets):
        with ScopedTiming("display_draw", self.debug_mode > 0):
            if dets:
                pl.osd_img.clear()  # clear the OSD image
                # ========== Print disabled: actual sizes inside PipeLine ==========
                # print(f"[PipeLine] internal rgb888p_size: {pl.rgb888p_size}, width a multiple of 8? {pl.rgb888p_size[0]%8==0}")
                # print(f"[PipeLine] internal display_size: {pl.display_size}, width a multiple of 8? {pl.display_size[0]%8==0}")
                for i, det in enumerate(dets):
                    # Convert the box coordinates to the display resolution
                    x, y, w, h = map(lambda x: int(round(x, 0)), det[:4])
                    x_origin = x  # original values
                    y_origin = y
                    w_origin = w
                    h_origin = h
                    x = x * self.display_size[0] // self.rgb888p_size[0]
                    y = y * self.display_size[1] // self.rgb888p_size[1]
                    w = w * self.display_size[0] // self.rgb888p_size[0]
                    h = h * self.display_size[1] // self.rgb888p_size[1]
                    # ========== Print disabled: box coordinates ==========
                    # print(f"[box {i}] original: x={x_origin}, y={y_origin}, w={w_origin}, h={h_origin}")
                    # print(f"[box {i}] scaled: x={x}, y={y}, w={w}, h={h}")
                    # print(f"[box {i}] scaled width w a multiple of 8? {w%8==0}, x a multiple of 8? {x%8==0}")
                    pl.osd_img.draw_rectangle(x, y, w, h, color=(255, 255, 0, 255), thickness=2)  # draw the rectangle
            else:
                pl.osd_img.clear()
                # ========== Print disabled: PipeLine size when there are no boxes ==========
                # print(f"[no boxes] PipeLine internal display_size: {pl.display_size}, width a multiple of 8? {pl.display_size[0]%8==0}")

    # Compute the padding parameters
    def get_padding_param(self):
        dst_w = self.model_input_size[0]  # model input width
        dst_h = self.model_input_size[1]  # model input height
        ratio_w = dst_w / self.rgb888p_size[0]  # width scale factor
        ratio_h = dst_h / self.rgb888p_size[1]  # height scale factor
        ratio = min(ratio_w, ratio_h)  # use the smaller scale factor
        new_w = int(ratio * self.rgb888p_size[0])  # scaled width
        new_h = int(ratio * self.rgb888p_size[1])  # scaled height
        # ========== Print disabled: padding parameters ==========
        # print(f"[padding] model input size: {self.model_input_size}, scaled width new_w={new_w}, a multiple of 8? {new_w%8==0}")
        dw = (dst_w - new_w) / 2  # half of the width difference
        dh = (dst_h - new_h) / 2  # half of the height difference
        top = int(round(0))
        bottom = int(round(dh * 2 + 0.1))
        left = int(round(0))
        right = int(round(dw * 2 - 0.1))
        return top, bottom, left, right

if __name__ == "__main__":
    # Display mode; defaults to "hdmi", can be "hdmi" or "lcd"
    display_mode="lcd"
    # Keep unchanged for k230; for k230d this can be changed to [640,360]
    # OSD layer content; keep it consistent with the sensor layer
    rgb888p_size = [320, 320]
    #rgb888p_size = [640,480]
    display_size = [960, 540]
    # ========== Print disabled: initial sizes in the main program ==========
    # print(f"[main] initial rgb888p_size: {rgb888p_size}, width a multiple of 8? {rgb888p_size[0]%8==0}")
    # print(f"[main] initial display_size: {display_size}, width a multiple of 8? {display_size[0]%8==0}")
    # Model path and other parameters
    kmodel_path = "/sdcard/examples/kmodel/face_detection_320.kmodel"
    # Other parameters
    confidence_threshold = 0.5
    nms_threshold = 0.2
    anchor_len = 4200
    det_dim = 4
    anchors_path = "/sdcard/examples/utils/prior_data_320.bin"
    anchors = np.fromfile(anchors_path, dtype=np.float)
    anchors = anchors.reshape((anchor_len, det_dim))
    # Initialize the PipeLine used for the image processing flow
    '''Display.init(
        type=Display.NT35516,
        width=960,
        height=540,
        flag=Display.FLAG_ROTATION_0, # force rotation off, replacing the default 90 degrees
        osd_num=3,
        to_ide=True
    )'''
    pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode,osd_layer_num=3)
    pl.create()  # create the PipeLine instance
    #pl.display_size = [536, 960]
    # ========== Print disabled: sizes after PipeLine initialization ==========
    # print(f"[after PipeLine init] rgb888p_size: {pl.rgb888p_size}, width a multiple of 8? {pl.rgb888p_size[0]%8==0}")
    # print(f"[after PipeLine init] display_size: {pl.display_size}, width a multiple of 8? {pl.display_size[0]%8==0}")
    # Initialize the custom face detection instance
    face_det = FaceDetectionApp(kmodel_path, model_input_size=[320, 320], anchors=anchors, confidence_threshold=confidence_threshold, nms_threshold=nms_threshold, rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)
    face_det.config_preprocess()  # configure preprocessing

    try:
        while True:
            os.exitpoint()  # check for an exit signal
            with ScopedTiming("total",1):
                img = pl.get_frame()  # get the current frame
                # ========== Print disabled: size after getting a frame ==========
                # print(f"[get frame] img size: {img.shape if hasattr(img, 'shape') else 'unknown'}, width a multiple of 8? {img.shape[2]%8==0 if hasattr(img, 'shape') else 'unknown'}")
                res = face_det.run(img)  # run inference on the current frame
                face_det.draw_result(pl, res)  # draw the results
                # ========== Print disabled: final size before display ==========
                # print(f"[before display] display width finally passed to show_image: {pl.display_size[0]}, a multiple of 8? {pl.display_size[0]%8==0}")
                pl.show_image()  # show the results
                gc.collect()  # garbage collection
    except Exception as e:
        sys.print_exception(e)  # print the exception
    finally:
        face_det.deinit()  # deinitialize
        pl.destroy()  # destroy the PipeLine instance
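To make the padding and box-scaling arithmetic in main.py easier to check off the board, here is a minimal standalone sketch. `padding_param` mirrors `get_padding_param` and `scale_box` mirrors the integer scaling in `draw_result`; both are rewritten as plain functions for illustration, and their names are hypothetical rather than part of the original script.

```python
def padding_param(model_input_size, rgb888p_size):
    """Bottom/right letterbox padding, following get_padding_param in main.py."""
    dst_w, dst_h = model_input_size
    src_w, src_h = rgb888p_size
    ratio = min(dst_w / src_w, dst_h / src_h)  # keep the aspect ratio
    new_w = int(ratio * src_w)
    new_h = int(ratio * src_h)
    dw = (dst_w - new_w) / 2
    dh = (dst_h - new_h) / 2
    top, left = 0, 0  # all padding goes to the bottom and right
    bottom = int(round(dh * 2 + 0.1))
    right = int(round(dw * 2 - 0.1))
    return top, bottom, left, right


def scale_box(box, src_size, dst_size):
    """Scale an (x, y, w, h) box from src_size to dst_size with integer division."""
    x, y, w, h = box
    return (
        x * dst_size[0] // src_size[0],
        y * dst_size[1] // src_size[1],
        w * dst_size[0] // src_size[0],
        h * dst_size[1] // src_size[1],
    )


print(padding_param((320, 320), (320, 320)))  # sizes used in the script
print(padding_param((320, 320), (640, 480)))  # the commented-out alternative
print(scale_box((0, 300, 20, 20), (320, 320), (960, 540)))
```

With the script's settings (320x320 in, 320x320 model input) no padding is needed. The last call shows that a box near the bottom of the AI frame scales to y=506, h=33 in 960x540 coordinates, so its lower edge (row 539) falls beyond the 536-row OSD image that PipeLine.py allocates. Whether `draw_rectangle` clips such a box is not something this sketch establishes.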

PipeLine.py

import os

import ujson

from media.sensor import *

from media.display import *

from media.media import *

from libs.Utils import ScopedTiming

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import time

import utime

import sys

# NT35516 strictly requires 960x540; the underlying hardware and driver require alignment, and this function guarantees alignment to 8
def align_up(value,alignment):
    return ((value + alignment - 1) // alignment) * alignment

def align_to_8(value):
    return (value // 8) * 8

def align_to_16(value):
    return (value // 16) * 16

# PipeLine class
class PipeLine:
    def __init__(self,rgb888p_size=[224,224],display_mode="hdmi",display_size=None,osd_layer_num=1,debug_mode=0):
        # Resolution of the image the sensor passes to the AI
        self.rgb888p_size=[align_to_16(rgb888p_size[0]),rgb888p_size[1]]
        #self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]
        # Video output (VO) resolution
        if display_size is None:
            self.display_size=None
        else:
            self.display_size=[display_size[0],display_size[1]]
        # Display mode; supported values: "lcd" (default st7701 800*480), "hdmi" (default lt9611), "lt9611", "st7701", "hx8399"
        self.display_mode=display_mode
        # sensor object
        self.sensor=None
        # Image object shown on the OSD layer
        self.osd_img=None
        self.cur_frame=None
        self.debug_mode=debug_mode
        self.osd_layer_num = osd_layer_num

    # PipeLine initialization
    def create(self,sensor=None,hmirror=None,vflip=None,fps=60):
        with ScopedTiming("init PipeLine",self.debug_mode > 0):
            nn.shrink_memory_pool()
            # Initialize and configure the sensor
            brd=os.uname()[-1]
            if brd=="k230d_canmv_bpi_zero":
                self.sensor = Sensor(fps=30) if sensor is None else sensor
            elif brd=="k230_canmv_lckfb":
                self.sensor = Sensor(fps=30) if sensor is None else sensor
            elif brd=="k230d_canmv_atk_dnk230d":
                self.sensor = Sensor(fps=30) if sensor is None else sensor
            else:
                self.sensor = Sensor(fps=fps) if sensor is None else sensor
            self.sensor.reset()
            if hmirror is not None and (hmirror==True or hmirror==False):
                self.sensor.set_hmirror(hmirror)
            if vflip is not None and (vflip==True or vflip==False):
                self.sensor.set_vflip(vflip)

            # Initialize the display
            if self.display_mode=="hdmi":
                # Use the LT9611 display, 1920x1080 by default
                if self.display_size==None:
                    Display.init(Display.LT9611,osd_num=self.osd_layer_num, to_ide = True)
                else:
                    Display.init(Display.LT9611, width=self.display_size[0], height=self.display_size[1],osd_num=self.osd_layer_num, to_ide = True)
            elif self.display_mode=="lcd":
                # Changed to NT35516 (the RT-Smart AI kit has an NT35516 panel on board)
                if self.display_size==None:
                    Display.init(Display.NT35516,osd_num=self.osd_layer_num,to_ide =False)
                else:
                    Display.init(Display.NT35516, width=self.display_size[0], height=self.display_size[1],osd_num=self.osd_layer_num, to_ide = False)
                # Original default: ST7701 display, 480x800
                #if self.display_size==None:
                #    Display.init(Display.ST7701, osd_num=self.osd_layer_num, to_ide=True)
                #else:
                #    Display.init(Display.ST7701, width=self.display_size[0], height=self.display_size[1], osd_num=self.osd_layer_num, to_ide=True)
            elif self.display_mode=="lt9611":
                # Use the LT9611 display, 1920x1080 by default
                if self.display_size==None:
                    Display.init(Display.LT9611,osd_num=self.osd_layer_num, to_ide = True)
                else:
                    Display.init(Display.LT9611, width=self.display_size[0], height=self.display_size[1],osd_num=self.osd_layer_num, to_ide = True)
            elif self.display_mode=="st7701":
                # Use the ST7701 display, 480x800
                if self.display_size==None:
                    Display.init(Display.ST7701, osd_num=self.osd_layer_num, to_ide=True)
                else:
                    Display.init(Display.ST7701, width=self.display_size[0], height=self.display_size[1], osd_num=self.osd_layer_num, to_ide=True)
            elif self.display_mode=="hx8399":
                # Use the HX8399 display, 1920x1080 by default
                if self.display_size==None:
                    Display.init(Display.HX8399, osd_num=self.osd_layer_num, to_ide=True)
                else:
                    Display.init(Display.HX8399, width=self.display_size[0], height=self.display_size[1], osd_num=self.osd_layer_num, to_ide=True)
            else:
                # Use the LT9611 display, 1920x1080 by default
                Display.init(Display.LT9611,osd_num=self.osd_layer_num, to_ide = True)
            self.display_size=[Display.width(),Display.height()]

            if self.display_mode=="lcd":
                # Channel 0 goes straight to the display VO, in YUV420 format
                self.sensor.set_framesize(width = self.display_size[0], height = align_to_8(self.display_size[1]))
                self.sensor.set_pixformat(PIXEL_FORMAT_YUV_SEMIPLANAR_420)
            else:
                self.sensor.set_framesize(w = self.display_size[0], h = self.display_size[1])
                self.sensor.set_pixformat(PIXEL_FORMAT_YUV_SEMIPLANAR_420)
            # Channel 2 goes to the AI for processing, in RGB888 format
            self.sensor.set_framesize(w = self.rgb888p_size[0], h = self.rgb888p_size[1], chn=CAM_CHN_ID_2)
            # set chn2 output format
            self.sensor.set_pixformat(PIXEL_FORMAT_RGB_888_PLANAR, chn=CAM_CHN_ID_2)
            # Initialize the OSD image
            osd_width = self.display_size[0]
            osd_height = align_to_8(self.display_size[1])
            print(f"OSD image size: {osd_width}x{osd_height}, height aligned to 8: {osd_height%8==0}")
            self.osd_img = image.Image(osd_width, osd_height, image.ARGB8888)
            sensor_bind_info = self.sensor.bind_info(x = 0, y = 0, chn = CAM_CHN_ID_0)
            Display.bind_layer(**sensor_bind_info, dstlayer = Display.LAYER_VIDEO1)
            # Initialize media
            MediaManager.init()
            # Start the sensor
            self.sensor.run()

    # Get one frame of image data, returned as a ulab array
    def get_frame(self):
        with ScopedTiming("get a frame",self.debug_mode > 0):
            self.cur_frame = self.sensor.snapshot(chn=CAM_CHN_ID_2)
            input_np=self.cur_frame.to_numpy_ref()
            return input_np

    # Show osd_img on the screen
    def show_image(self):
        with ScopedTiming("show result",self.debug_mode > 0):
            Display.show_image(self.osd_img, 0, 0, Display.LAYER_OSD3)

    def get_display_size(self):
        return self.display_size

    # PipeLine teardown
    def destroy(self):
        with ScopedTiming("deinit PipeLine",self.debug_mode > 0):
            os.exitpoint(os.EXITPOINT_ENABLE_SLEEP)
            # stop sensor
            self.sensor.stop()
            # deinit lcd
            Display.deinit()
            utime.sleep_ms(50)
            # deinit media buffer
            MediaManager.deinit()
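PipeLine.py defines a round-up helper (`align_up`) alongside the round-down helpers (`align_to_8`, `align_to_16`), but only the round-down ones are actually called. The sketch below shows what each gives for the 960x540 panel and which sizes `create()` ends up deriving in "lcd" mode. `align_down` and `derived_sizes` are hypothetical names used only for this illustration.

```python
def align_down(value, alignment):
    """Round down to a multiple of alignment (what align_to_8 / align_to_16 do)."""
    return (value // alignment) * alignment


def align_up(value, alignment):
    """Round up to a multiple of alignment (same formula as align_up in PipeLine.py)."""
    return ((value + alignment - 1) // alignment) * alignment


def derived_sizes(display_size, alignment=8):
    """Sizes that create() derives from display_size in "lcd" mode."""
    width, height = display_size
    aligned_height = align_down(height, alignment)
    return {
        "display": (width, height),              # passed to Display.init
        "sensor_chn0": (width, aligned_height),  # passed to set_framesize
        "osd": (width, aligned_height),          # passed to image.Image
        "height_gap": height - aligned_height,   # rows not covered by the OSD image
    }


print(align_down(540, 8), align_up(540, 8))
print(derived_sizes((960, 540)))
```

For 960x540 the round-down path gives 536 rows and the round-up path would give 544, so the display is initialized at 540 rows while the video channel and the OSD image use 536, leaving a 4-row gap. Whether that mismatch plays any part in the `OSD rotate error` is not established by the logs in this post; it is only one of the size relationships that may be worth ruling out.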